The Autonomy Engine

Continuous Self-Learning

A closed-loop pipeline that turns real-world edge cases into "hard" synthetic training data, automating the fine-tuning of your autonomy stack.

"Stop training on empty miles. Start training on the edge."

Traditional autonomy development stalls because real-world data is expensive to collect and rarely captures the dangerous "long-tail" events that break your AI.

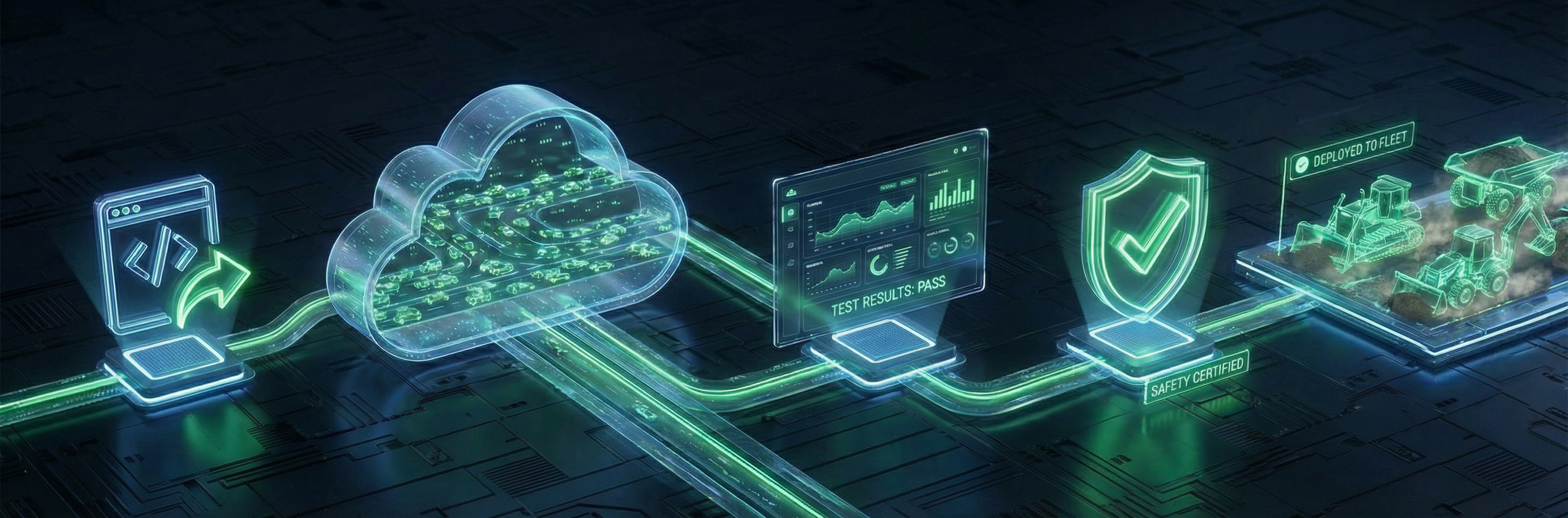

Cognitron PhysAI replaces this linear process with an Active Learning Loop. By continuously comparing your model's predictions against real-world outcomes, we identify exactly where your AI is weak. Our platform then automatically generates "Adversarial Synthetic Data"—specifically crafted, high-difficulty scenarios that target those weaknesses.
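One way to picture "identifying where your AI is weak" is as bookkeeping over logged events. The minimal Python sketch below tallies events by condition and ranks each condition by how often the model's prediction disagreed with the real outcome. The event format, the condition tags (such as "dust" or "night"), and the name `rank_weaknesses` are hypothetical illustrations, not Cognitron PhysAI's actual method.

```python
from collections import defaultdict

def rank_weaknesses(events, min_count=1):
    """Rank condition tags by the rate at which prediction and outcome disagreed.

    `events` is an iterable of (tags, predicted, actual) tuples, where `tags`
    is a set of hypothetical condition labels such as {"dust", "night"}.
    Tags seen fewer than `min_count` times are skipped as too sparse to judge.
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for tags, predicted, actual in events:
        for tag in tags:
            totals[tag] += 1
            if predicted != actual:
                misses[tag] += 1
    rates = {tag: misses[tag] / n for tag, n in totals.items() if n >= min_count}
    # Highest disagreement rate first; ties broken alphabetically for stable output.
    return sorted(rates.items(), key=lambda item: (-item[1], item[0]))

events = [
    ({"dust", "day"}, "stop", "stop"),
    ({"dust", "night"}, "go", "stop"),
    ({"clear", "day"}, "go", "go"),
    ({"dust", "day"}, "go", "stop"),
]
# night misses 1 of 1, dust 2 of 3, day 1 of 3, clear 0 of 1
for tag, rate in rank_weaknesses(events):
    print(f"{tag}: {rate:.2f}")
```

The `min_count` filter keeps a single unlucky event from dominating the ranking; with real fleet data it would be set much higher.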

The loop runs in four stages:

1. Synthetic Bootstrapping (The Cold Start)
2. Active Deployment (Data Harvesting)
3. Real-to-Sim (Compare & Correct)
4. Adversarial Amplification (The "Harder" Data)

Stage 1: Synthetic Bootstrapping

Physics-Driven Generation

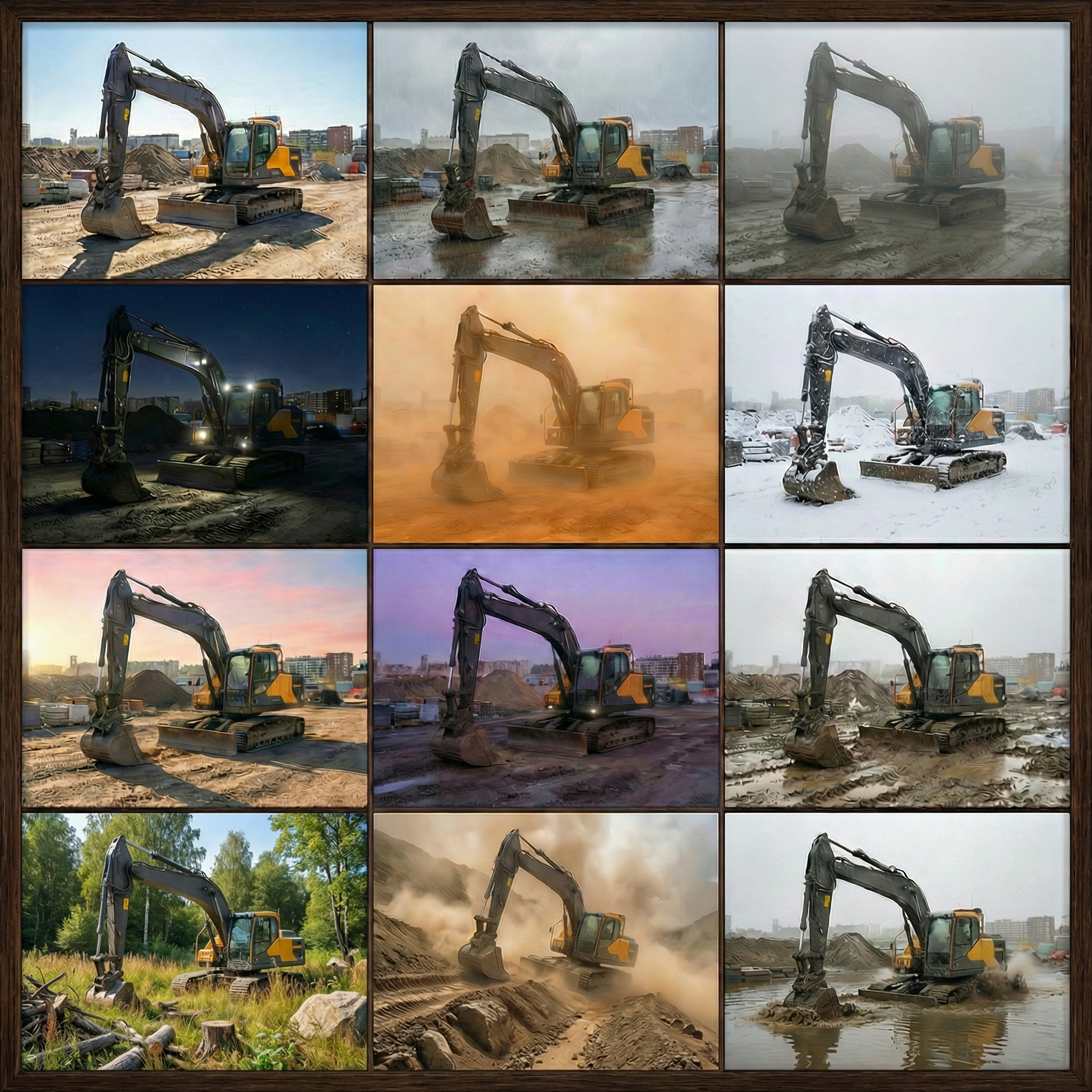

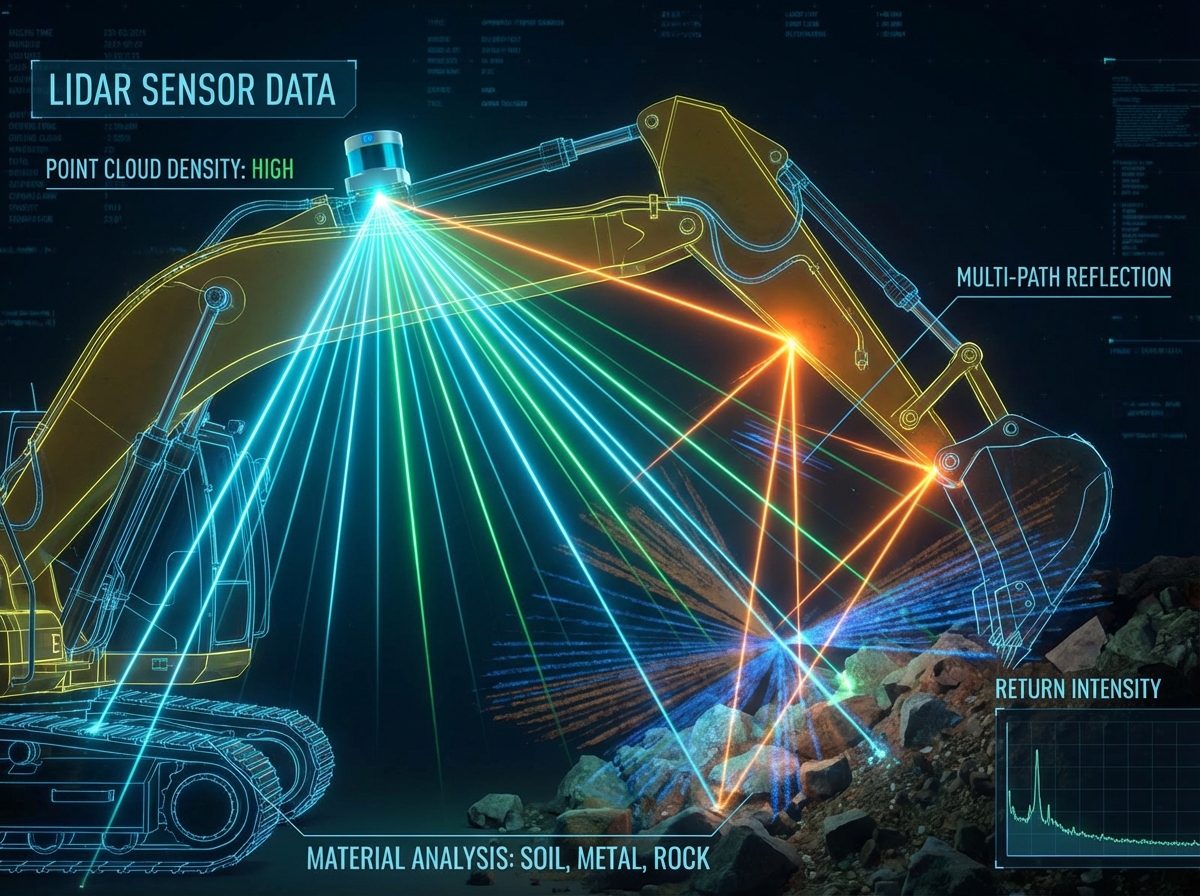

We ingest your vehicle model, sensor suite, and operational design domain (ODD) to generate millions of baseline training frames. Our generative world models apply domain randomization, varying weather, lighting, and soil textures, to train a robust initial policy without a single hour of real-world operation.
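Domain randomization amounts to sampling scene parameters from broad ranges before rendering. The sketch below assumes uniform sampling and uses hypothetical names, option lists, and ranges (`SceneParams`, `WEATHER`, `SOIL_TEXTURES`, a sun-elevation range in degrees). The rendering step that turns each configuration into sensor frames is not shown.

```python
import random
from dataclasses import dataclass

# Hypothetical option lists; in practice these would be derived from the ODD.
WEATHER = ["clear", "overcast", "rain", "fog", "dust"]
SOIL_TEXTURES = ["dry_clay", "wet_clay", "gravel", "sand", "loam"]
SUN_ELEVATION_RANGE_DEG = (-5.0, 85.0)  # negative: sun just below the horizon (twilight)

@dataclass(frozen=True)
class SceneParams:
    weather: str
    sun_elevation_deg: float
    soil_texture: str

def randomize_scene(rng: random.Random) -> SceneParams:
    """Draw one scene configuration uniformly from the ranges above."""
    return SceneParams(
        weather=rng.choice(WEATHER),
        sun_elevation_deg=rng.uniform(*SUN_ELEVATION_RANGE_DEG),
        soil_texture=rng.choice(SOIL_TEXTURES),
    )

def baseline_batch(n: int, seed: int = 0) -> list[SceneParams]:
    """Generate n randomized scenes; the fixed seed makes a batch reproducible."""
    rng = random.Random(seed)
    return [randomize_scene(rng) for _ in range(n)]

for scene in baseline_batch(3):
    print(scene)
```

Seeding each batch means any frame that later proves useful can be regenerated exactly from its configuration.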

Stage 2: Active Deployment & Harvesting

Targeted Data Collection

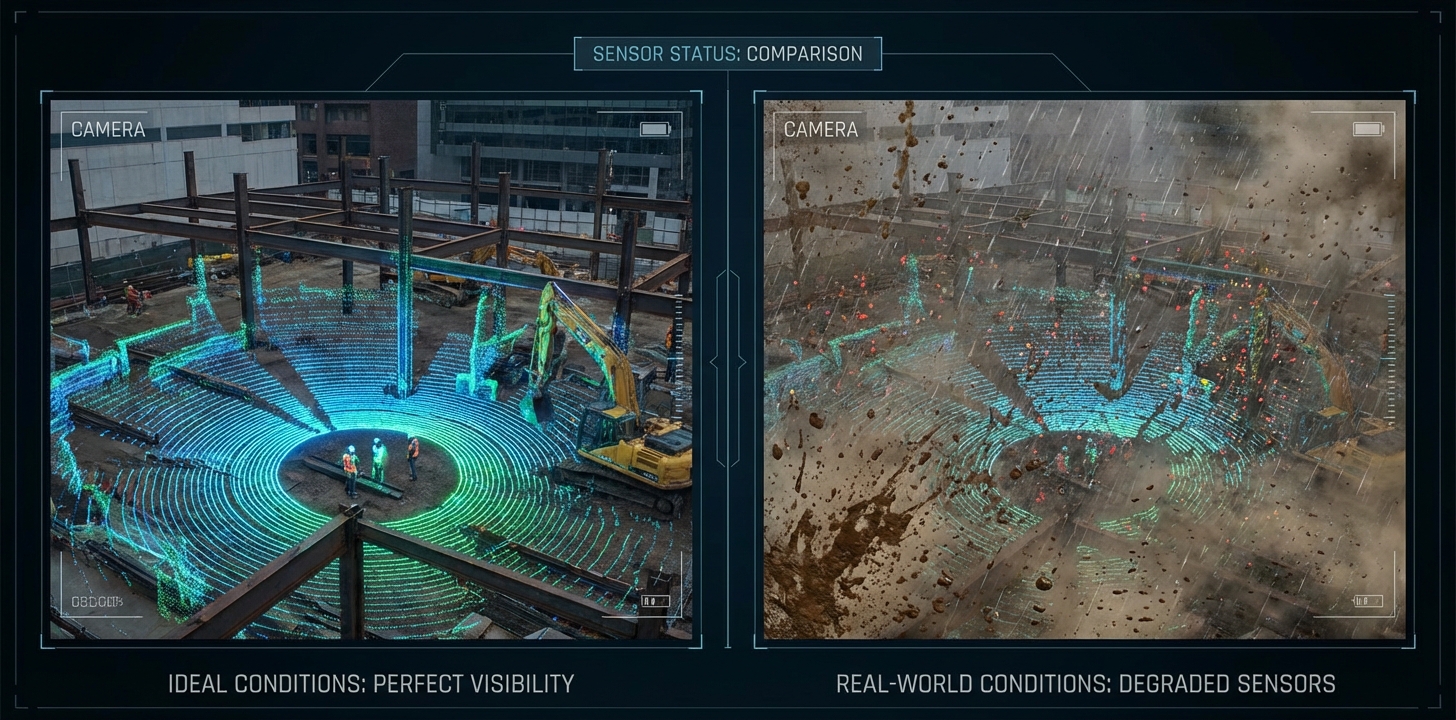

Deploy the model to the field. As your machines operate, our system automatically flags and uploads "low-confidence" events (moments where the AI was uncertain or the operator had to intervene) and filters out terabytes of uneventful data.
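The page does not specify how "low-confidence" is measured. A common approach, assumed here, is to threshold the normalized entropy of the model's output distribution and always keep frames where the operator intervened. `FrameLog`, `should_upload`, `harvest`, and the 0.6 threshold are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class FrameLog:
    timestamp: float
    class_probs: list[float]   # model's output distribution for this frame
    operator_intervened: bool  # e.g. a manual takeover or emergency stop

def normalized_entropy(probs: list[float]) -> float:
    """Shannon entropy divided by its maximum, so 0 = certain and 1 = uniform."""
    if len(probs) < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))

def should_upload(frame: FrameLog, entropy_threshold: float = 0.6) -> bool:
    """Keep a frame if the operator intervened or the model was uncertain."""
    return frame.operator_intervened or normalized_entropy(frame.class_probs) >= entropy_threshold

def harvest(frames: list[FrameLog]) -> list[FrameLog]:
    """Drop confident, intervention-free frames before upload."""
    return [f for f in frames if should_upload(f)]

frames = [
    FrameLog(0.0, [0.98, 0.01, 0.01], False),  # confident: dropped
    FrameLog(0.1, [0.40, 0.35, 0.25], False),  # uncertain: kept
    FrameLog(0.2, [0.97, 0.02, 0.01], True),   # operator took over: kept
]
print([f.timestamp for f in harvest(frames)])  # prints [0.1, 0.2]
```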

Stage 3: Real-to-Sim Reconstruction

Automated Root Cause Analysis

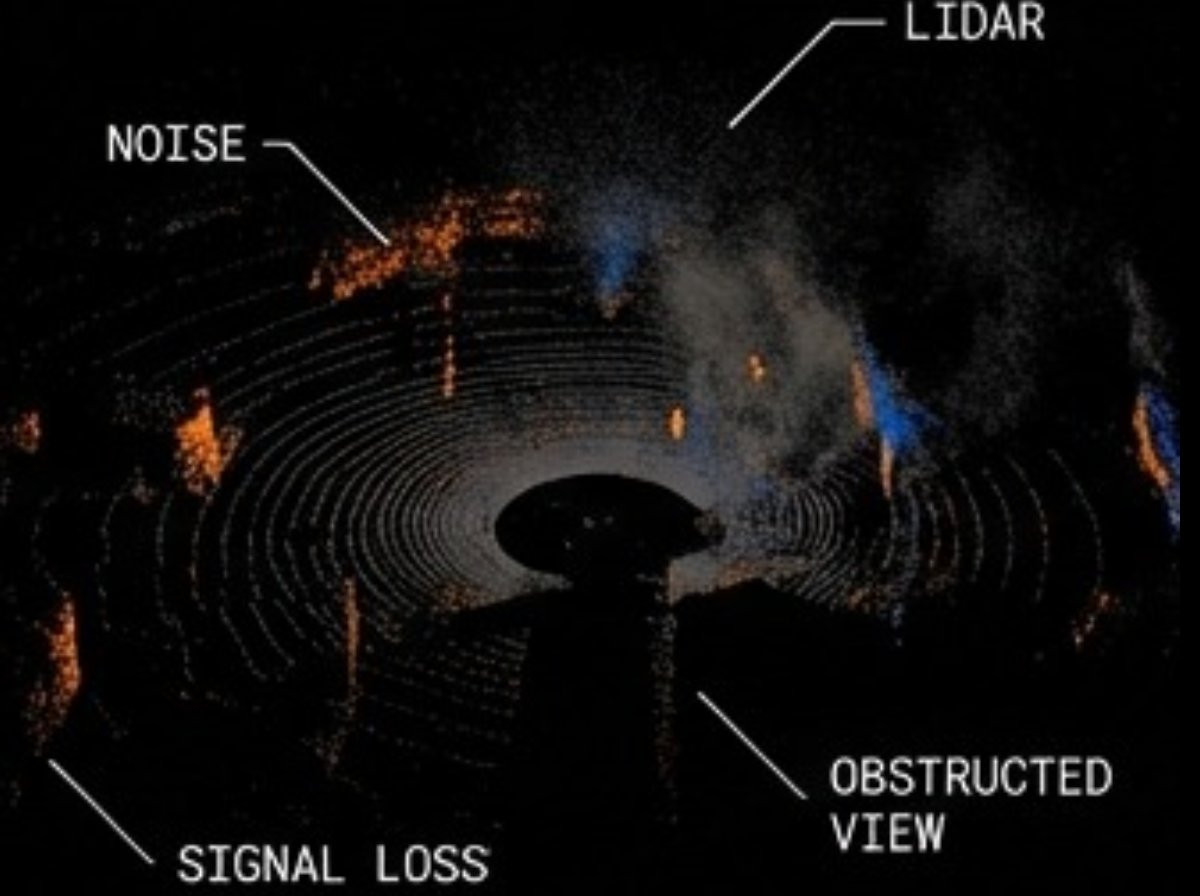

The system ingests real-world failure logs and automatically reconstructs the exact scenario in simulation. It then compares the model's prediction against what the operator actually did (the ground truth) to quantify where the two diverged and identify why the failure occurred.
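The page does not say which metric drives this comparison. For a planner that outputs a trajectory, a common choice is average and final displacement error (ADE/FDE) between the predicted path and the path the operator actually drove, plus the first timestep where they diverge beyond a tolerance. The sketch below substitutes those metrics; the 0.5 m tolerance and the function names are hypothetical.

```python
import math

Point = tuple[float, float]  # (x, y) in metres

def displacement_errors(predicted: list[Point], actual: list[Point]) -> list[float]:
    """Per-timestep Euclidean distance between predicted and operator-driven positions."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("trajectories must be non-empty and sampled at the same timesteps")
    return [math.dist(p, a) for p, a in zip(predicted, actual)]

def divergence_report(predicted: list[Point], actual: list[Point], tolerance_m: float = 0.5) -> dict:
    """Summarize how far the prediction strayed from ground truth, and when."""
    errors = displacement_errors(predicted, actual)
    first_bad = next((i for i, e in enumerate(errors) if e > tolerance_m), None)
    return {
        "ade": sum(errors) / len(errors),  # average displacement error
        "fde": errors[-1],  # final displacement error
        "first_divergence_step": first_bad,  # None if always within tolerance
    }

predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
driven = [(0.0, 0.0), (1.0, 0.2), (2.0, 1.0), (3.0, 2.0)]
print(divergence_report(predicted, driven))
```

The first divergence step narrows the reconstruction to the frames just before it, which is where a root-cause search would start.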

Stage 4: Adversarial Amplification

Adversarial Fine-Tuning

This is where we close the gap. The platform takes that single real-world failure and uses generative AI to spawn 10,000 "harder" variations, adding blinding dust, sensor noise, or slippery mud. The model is then fine-tuned on this hyper-targeted "Gold" dataset to master the edge case.
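The page attributes the variations to generative AI. The sketch below swaps in plain random parameter perturbation to show only the selection logic: each variant gets more dust, more sensor noise, and lower friction (mud), and only variants on which the model scores worse than on the original failure are kept. `Scenario`, `perturb`, `amplify`, and the `evaluate` callback (which in practice would run the model in simulation) are hypothetical, and `toy_score` is a stand-in for that simulation run.

```python
import random
from dataclasses import dataclass, replace
from typing import Callable

@dataclass(frozen=True)
class Scenario:
    dust_density: float  # hypothetical scale: 0 = clear air, 1 = opaque
    noise_sigma: float   # std. dev. of additive sensor noise
    friction: float      # tire-ground friction coefficient; lower = more slippery

def perturb(base: Scenario, rng: random.Random) -> Scenario:
    """Return a variant that is no easier than `base` on any axis."""
    return replace(
        base,
        dust_density=min(1.0, base.dust_density + rng.uniform(0.0, 0.5)),
        noise_sigma=base.noise_sigma + rng.uniform(0.0, 0.1),
        friction=base.friction * rng.uniform(0.4, 1.0),
    )

def amplify(
    base: Scenario,
    evaluate: Callable[[Scenario], float],
    n_variants: int = 10_000,
    seed: int = 0,
) -> list[Scenario]:
    """Spawn variants of one failure and keep those the model handles worse."""
    rng = random.Random(seed)
    base_score = evaluate(base)  # higher score = better model performance
    hard = []
    for _ in range(n_variants):
        variant = perturb(base, rng)
        if evaluate(variant) < base_score:
            hard.append(variant)
    return hard

# Toy stand-in for "run the model in simulation and score it".
def toy_score(s: Scenario) -> float:
    return s.friction - s.dust_density - s.noise_sigma

gold = amplify(Scenario(dust_density=0.1, noise_sigma=0.01, friction=0.8), toy_score)
print(f"kept {len(gold)} harder variants")
```

Filtering on the model's own score is what keeps the resulting set focused on the weakness rather than on random noise.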